Production efficiency can be improved by maximizing the time that machines are operational through predictive maintenance, for example by predicting the distribution of future time-to-failure from raw time-series data. In a previous blog post, KAISPE LLC introduced the predictive maintenance capabilities in its solutions and briefly discussed how we include them in our IoT web portal to help customers proactively maintain their business assets.

To demonstrate the predictive maintenance solution, we will walk step by step through predictive maintenance of air compressors using the Azure Machine Learning service. We will train the machine learning model on remote compute resources and use the Azure Machine Learning workflow in a Python Jupyter notebook, which can also serve as a template for training your own machine learning model with your own data.

Let’s set up a development environment and create a workspace.

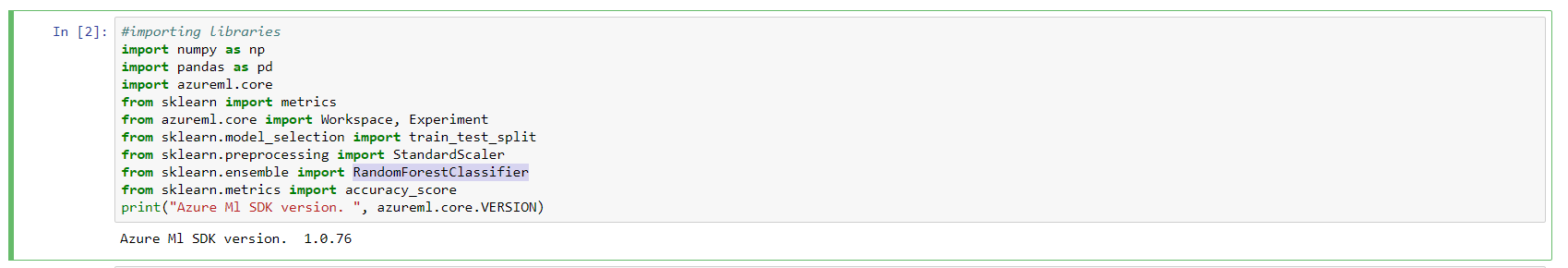

1- Set up a development environment

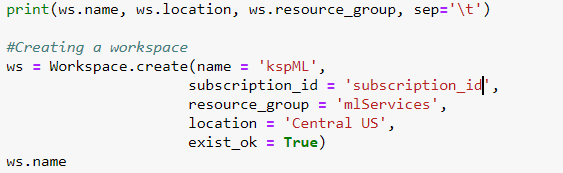

2- Create a workspace

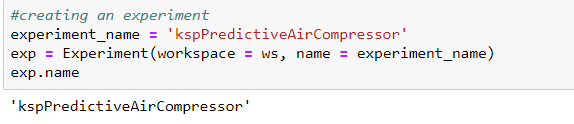

3- Create an experiment

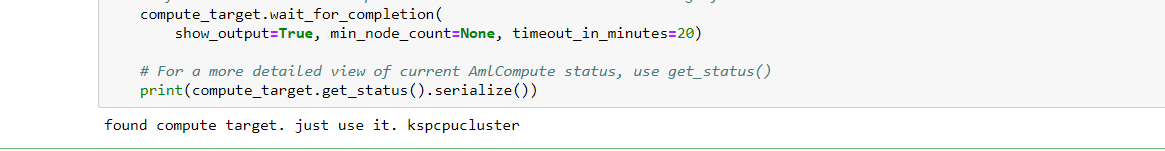

4- Create or attach an existing compute target

Note: You can also create the compute resources in the Azure portal.

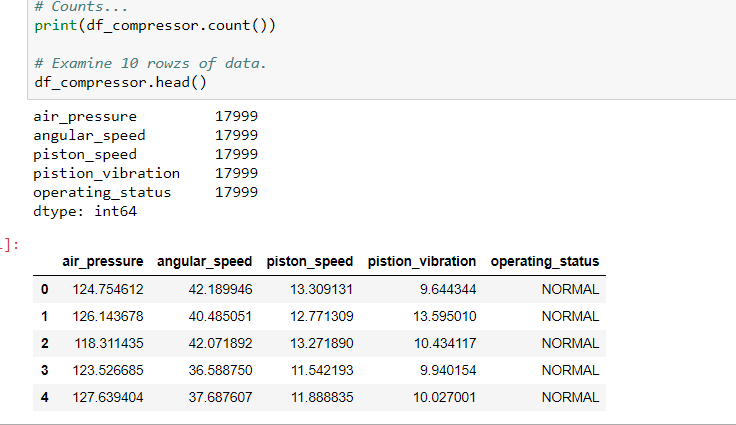

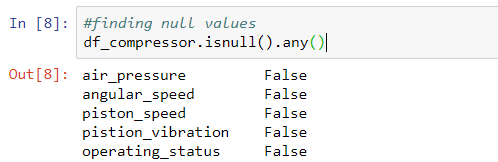
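Steps 2 to 4 can be sketched roughly as follows. This assumes the Azure Machine Learning Python SDK v1 (`azureml-core`) is installed, the workspace already exists, and its `config.json` sits in the working directory. The helper names, the cluster name `cpucluster`, and the VM size `STANDARD_D2_V2` are illustrative assumptions, not values taken from this project.

```python
def get_workspace_and_experiment(experiment_name):
    """Load the workspace from a local config.json and open an experiment in it."""
    # Imported lazily so this file can be loaded without the SDK installed.
    from azureml.core import Experiment, Workspace

    ws = Workspace.from_config()  # reads config.json downloaded from the portal
    exp = Experiment(workspace=ws, name=experiment_name)
    return ws, exp


def get_or_create_compute(ws, compute_name="cpucluster",
                          vm_size="STANDARD_D2_V2", max_nodes=4):
    """Reuse a compute target with this name if present, else provision a cluster."""
    if compute_name in ws.compute_targets:
        # Attach to the existing target instead of creating a duplicate.
        return ws.compute_targets[compute_name]

    from azureml.core.compute import AmlCompute, ComputeTarget

    config = AmlCompute.provisioning_configuration(
        vm_size=vm_size, min_nodes=0, max_nodes=max_nodes
    )
    target = ComputeTarget.create(ws, compute_name, config)
    target.wait_for_completion(show_output=True)  # blocks until provisioned
    return target
```

A notebook cell would then call something like `ws, exp = get_workspace_and_experiment("air-compressor-pdm")` followed by `compute_target = get_or_create_compute(ws)`, where the experiment name is again only a placeholder.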

In the next step, we verify the dataset and upload it to the cloud so that the cloud training environment can access it. We save the model training data to a CSV file.

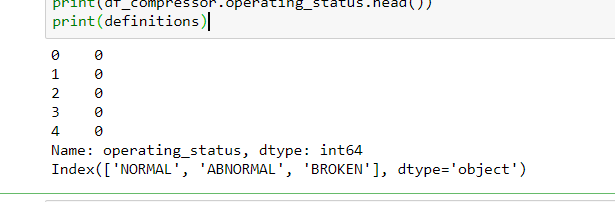
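A minimal sketch of saving the data and uploading it might look like the code below. It assumes the SDK v1 workspace object (`ws`) and its default datastore; the file name `training_data.csv`, the target path `air_compressor`, and both helper functions are hypothetical. Newer SDK releases may prefer other upload mechanisms, so treat the `upload` call as one possible option.

```python
import csv
import os


def save_training_csv(header, rows, data_dir, filename="training_data.csv"):
    """Write the training rows to <data_dir>/<filename> and return the path."""
    os.makedirs(data_dir, exist_ok=True)
    path = os.path.join(data_dir, filename)
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return path


def upload_training_data(ws, data_dir, target_path="air_compressor"):
    """Upload the local data folder to the workspace's default datastore."""
    ds = ws.get_default_datastore()
    ds.upload(src_dir=data_dir, target_path=target_path,
              overwrite=True, show_progress=True)
    return ds
```

The returned datastore can later be handed to the training job so the remote cluster reads the same files.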

So, let’s convert our label column values from [‘NORMAL’, ‘ABNORMAL’, ‘BROKEN’] to [0, 1, 2]. Encoding the labels as integers gives scikit-learn’s Random Forest a numeric target and keeps the predictions easy to store and compare.
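The label conversion can be illustrated with a plain dictionary lookup. The mapping follows the three classes named above; the function name `encode_labels` is an assumption made for this sketch.

```python
# Mapping from the text labels in the dataset to integer class ids.
LABEL_TO_ID = {"NORMAL": 0, "ABNORMAL": 1, "BROKEN": 2}


def encode_labels(labels):
    """Map each text label to its integer class; unknown labels raise KeyError."""
    return [LABEL_TO_ID[label] for label in labels]


print(encode_labels(["NORMAL", "BROKEN", "ABNORMAL", "NORMAL"]))  # [0, 2, 1, 0]
```

If the data is held in a pandas DataFrame, the same dictionary can be applied with `df["label"].map(LABEL_TO_ID)`, assuming the label column is called `label`.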

Next, we train the model by submitting a job to the remote training cluster we set up earlier. Submitting a job involves these tasks:

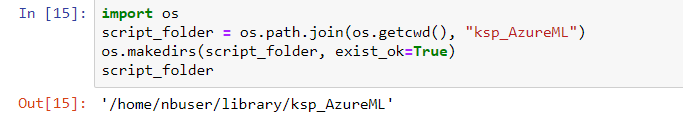

- Create a directory

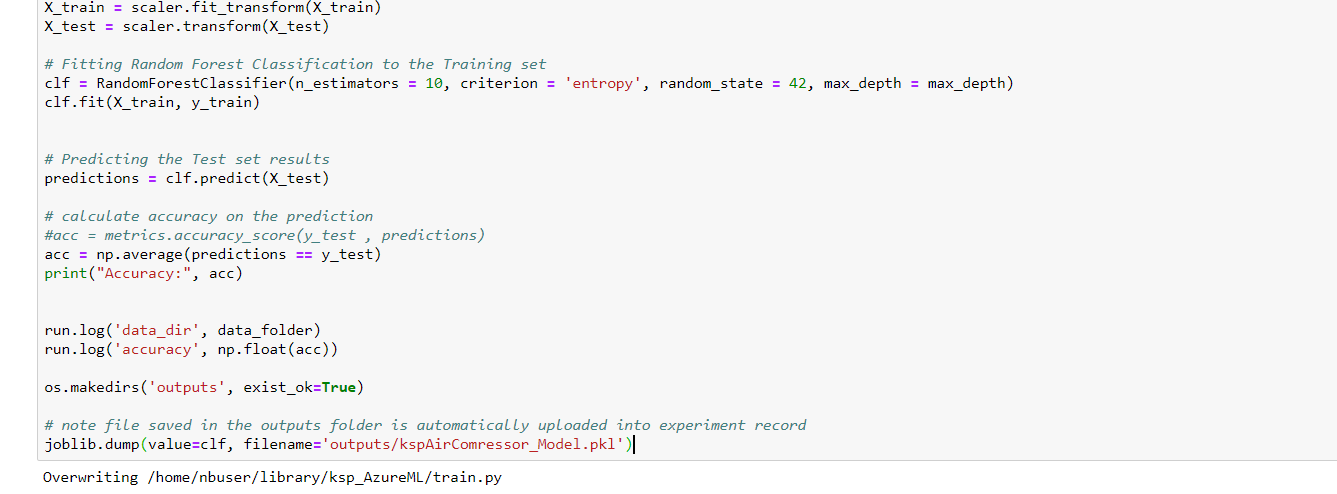

- Create a training script

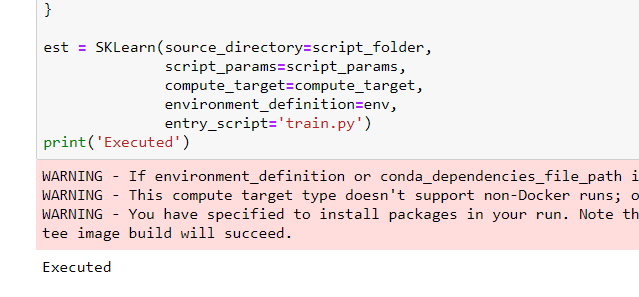

- Create an estimator object

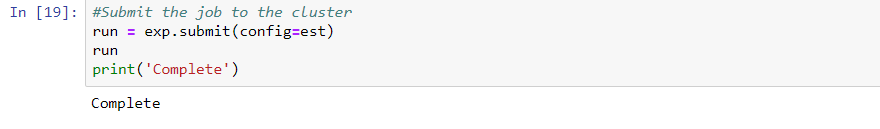

- Submit the job

So let’s create the directory that delivers the necessary code from your computer to the remote resource, and then create a training script in it.
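As a rough illustration, the directory and training script could be created as shown below. The script body is only a sketch: the file name `training_data.csv`, the `label` column, the hyperparameters, and the output file name `air_compressor_model.pkl` are all assumptions, and the real script in the screenshots may differ.

```python
import os
import textwrap

# Contents of the training script that will run on the remote cluster.
TRAIN_SCRIPT = textwrap.dedent('''\
    import argparse
    import os

    import joblib
    import pandas as pd
    from azureml.core import Run
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import train_test_split

    parser = argparse.ArgumentParser()
    parser.add_argument("--data-folder", type=str, dest="data_folder")
    args = parser.parse_args()

    # Hypothetical file and column names; adjust them to your dataset.
    df = pd.read_csv(os.path.join(args.data_folder, "training_data.csv"))
    X = df.drop(columns=["label"])
    y = df["label"]
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )

    clf = RandomForestClassifier(n_estimators=100, random_state=42)
    clf.fit(X_train, y_train)

    # Log the hold-out accuracy to the experiment run.
    run = Run.get_context()
    run.log("accuracy", float(clf.score(X_test, y_test)))

    # Files written to ./outputs are kept with the run history.
    os.makedirs("outputs", exist_ok=True)
    joblib.dump(clf, "outputs/air_compressor_model.pkl")
''')


def write_training_script(script_folder):
    """Create the script folder and write train.py into it; return its path."""
    os.makedirs(script_folder, exist_ok=True)
    path = os.path.join(script_folder, "train.py")
    with open(path, "w") as f:
        f.write(TRAIN_SCRIPT)
    return path
```

Everything placed in the script folder is copied to the cluster when the job is submitted, so it is worth keeping that folder small.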

Now we create an estimator and submit the job to the cluster.

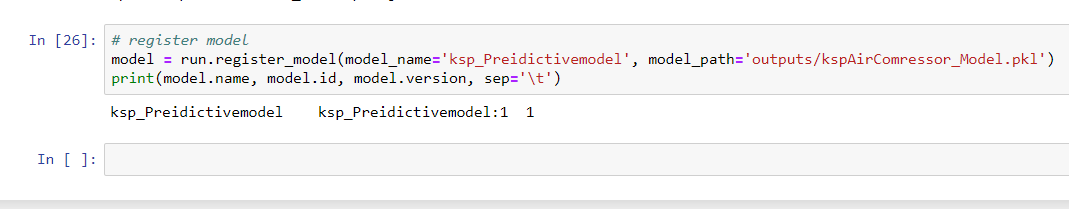
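One way this step might look with the SDK v1 `Estimator` class is sketched below. It assumes an `Experiment`, a datastore holding the uploaded data, a compute target, and a script folder containing `train.py`. The function name, the datastore path `air_compressor`, and the package list are assumptions for illustration. Note that estimators belong to the older v1 SDK and later releases favor other job configurations.

```python
def submit_training_job(exp, ds, compute_target, script_folder,
                        data_path="air_compressor"):
    """Build an estimator for train.py and submit it to the remote cluster."""
    # Imported lazily so this file can be loaded without the SDK installed.
    from azureml.train.estimator import Estimator

    est = Estimator(
        source_directory=script_folder,   # folder copied to the cluster
        entry_script="train.py",          # script executed on the cluster
        script_params={"--data-folder": ds.path(data_path).as_mount()},
        compute_target=compute_target,
        conda_packages=["scikit-learn", "pandas"],
    )
    run = exp.submit(config=est)
    run.wait_for_completion(show_output=True)  # stream logs until the job ends
    return run
```

The returned `run` object gives access to the logged metrics and output files once the job has finished.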

Lastly, we register the model in the workspace so that you or your colleagues can later query, examine, and deploy it.

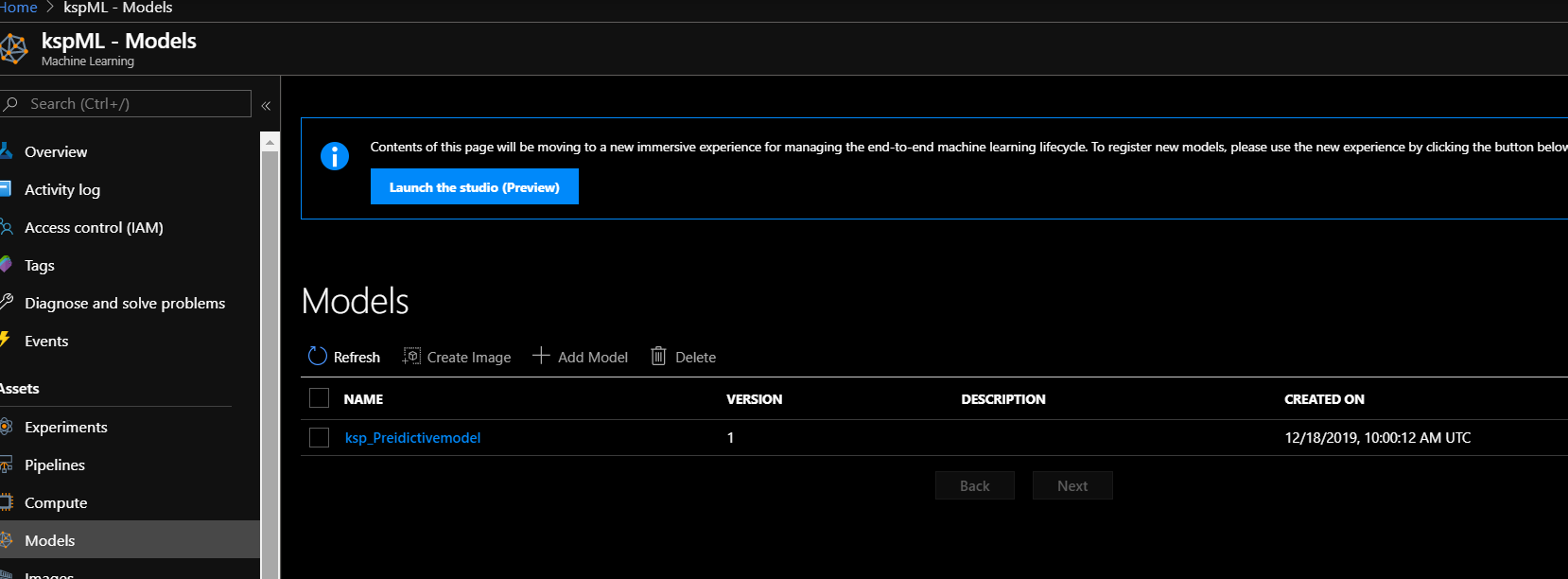
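Registration from a completed run can be sketched as follows, assuming an SDK v1 `Run` object whose training script saved the model under `outputs/`. The model name and file path are hypothetical placeholders.

```python
def register_trained_model(run, model_name="air_compressor_pdm",
                           model_path="outputs/air_compressor_model.pkl"):
    """Register the model file produced by the run in the workspace."""
    # model_path is relative to the run's output files, not the local disk.
    return run.register_model(model_name=model_name, model_path=model_path)
```

The call returns a model object whose name and version can be used to look the model up again later.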

Finally, we can see the experiment run and the registered model in the Azure portal.

I hope you found this blog post helpful. If you have any questions, please feel free to contact [email protected].